Following years of feedback and test iteration with our customers, partners, and the AI engineering community, our machine learning benchmark is now ready for general use, and it has a new name: Geekbench AI.

If you’ve followed along with prior preview releases, then you’re familiar with the basic premise. In short, Geekbench AI is a benchmarking suite and testing methodology for machine learning, deep learning, and AI-centric workloads, with the same cross-platform utility and focus on real-world workloads that our benchmarks are well known for. Software developers can use it to ensure a consistent experience for their apps across platforms, hardware engineers can use it to measure architectural improvements, and everyone can use it to measure and troubleshoot device performance with a suite of tasks based on how devices actually use AI.

Geekbench AI 1.0 is available through our downloads page for Windows, macOS, and Linux, as well as on both the Google Play Store and the Apple App Store.

What’s in a Name?

We called prior preview releases for our machine learning benchmark “Geekbench ML.” But in recent years, companies have coalesced around the term “AI” for these workloads (and their related marketing). To ensure that everyone, from engineers to performance enthusiasts, understands what this benchmark does and how it works, it was time for an update.

High Score

Measuring performance is, put simply, really hard. That’s not because it’s hard to run an arbitrary test, but because it’s hard to determine which tests are the most important for the performance you want to measure, especially across different platforms, and particularly when everyone is doing things in subtly different ways. At Primate Labs, we hold detailed and ongoing conversations with software and hardware engineers across the industry so that our tests reflect the use cases developers actually build their applications for, rather than just crunching basic math for hours.

Back in the 90s and 2000s, if you played PC games, your video card wasn’t just about the raw computing power of the GPU. It could have proprietary hardware accelerator chips, manufacturer-specific developer libraries to add or enhance game effects, and variable quality of support for software frameworks like Direct3D. As a consumer, trying to figure out which card to buy was hard because different games would support different video cards in different ways — you might want the latest Direct3D on your GPU for one title, but Glide for another, and perhaps there was no single GPU on the market that met all your needs. Everything was a trade-off.

AI takes this problem and turns up the complexity dial to 11.

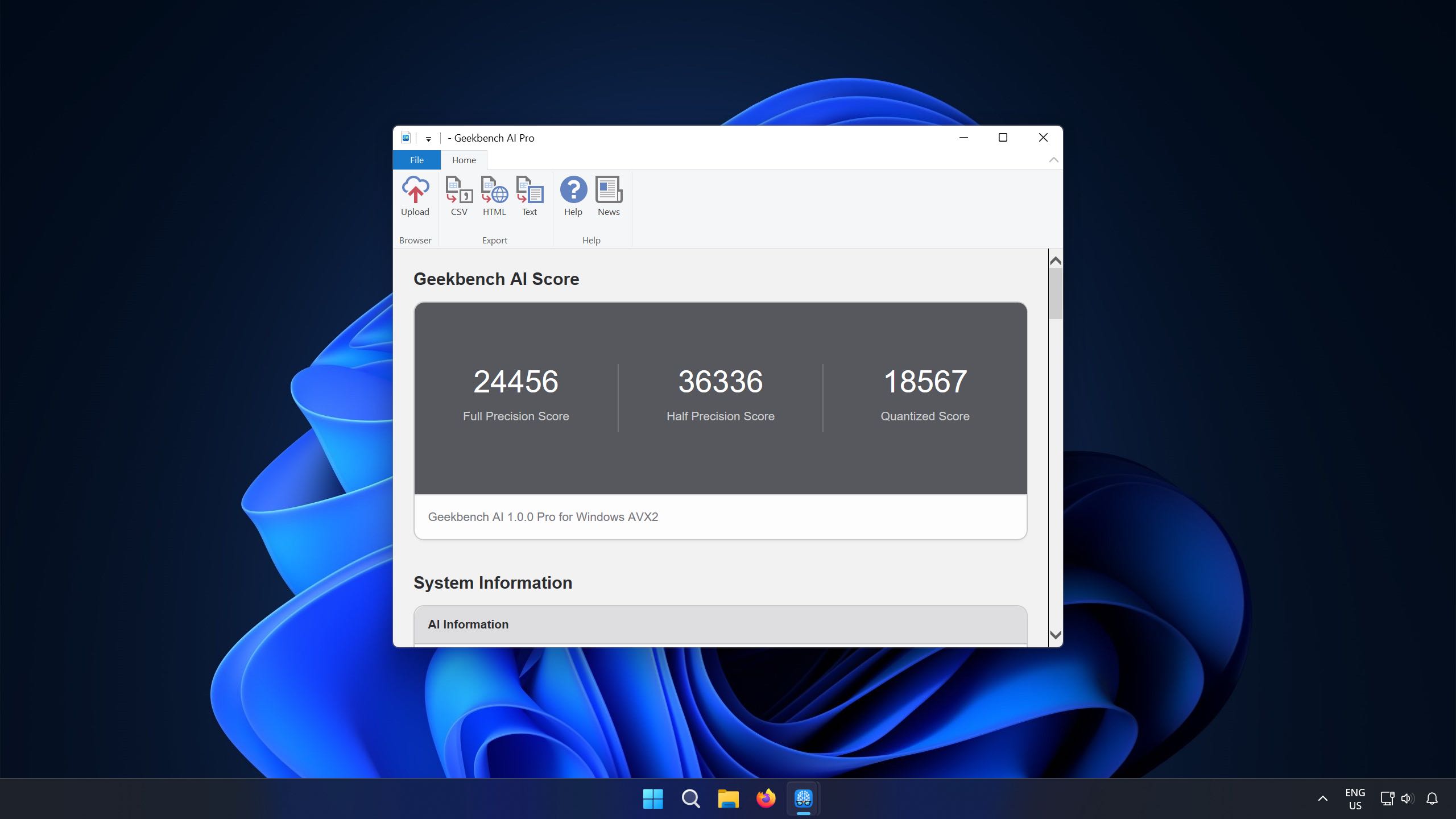

To accommodate this complexity, Geekbench AI provides three overall scores (as shown above): single precision, half precision, and quantized. We chose three because AI hardware design varies between devices and silicon vendors, as does how developers leverage that hardware. Just as CPU-bound workloads vary in how they can take advantage of multiple cores or threads for performance scaling (necessitating both single-core and multi-core metrics in most related benchmarks), AI workloads cover a range of precision levels, depending on the task needed, the hardware available, and the frameworks in between.

By showing you performance across three dimensions instead of just one, Geekbench AI gives you the insight a developer or a hardware vendor would look for when benchmarking a given device’s AI performance. This is part of what we mean when we say Geekbench AI is designed to measure AI performance in the real world: AI is complex, heterogeneous, and changing very fast. With our benchmark, you can explore how different approaches at the hardware level have been optimized for particular tasks.

We recognize this is tricky, and one day, measuring AI performance will probably get easier. But rather than pretending this stuff is simpler than it is, we took the same approach with Geekbench AI that we did with the original Geekbench: recognizing that workload performance is a function of both hardware capability and workload characteristics, and that different workloads exercise hardware differently. Without realistic workloads, you will not have a realistic understanding of hardware performance.

Speed Plus Accuracy

Relatedly, Geekbench AI’s workload scores also include a new accuracy measurement on a per-test basis. That’s because AI performance isn’t only tied to how quickly a given workload runs, but also how close its outputs are to the truth — in other words, how accurately that model can do what it’s supposed to do.

For example, your hotdog object detection model might be able to run very quickly, but if it can only accurately detect a hotdog 0.2% of the time one is actually present, it’s not very good. This accuracy measurement can also help developers see the benefits and drawbacks of smaller data types, which can increase performance and efficiency at the expense of (potentially!) lower accuracy. Comparing accuracy as part of performance through the use of our database can also help developers estimate relative efficiency.
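To make the trade-off between smaller data types and accuracy concrete, here is a minimal Python sketch. It is not how Geekbench AI computes its accuracy scores. It assumes a toy linear classifier with random weights, treats the full-precision model's own predictions as the ground truth, and converts only the weights (not the activations) to half precision and to symmetric 8-bit integers. All function names are hypothetical and chosen for illustration.

```python
import random
import struct


def to_half(x: float) -> float:
    """Round x to IEEE 754 half precision (16-bit) and back to a Python float."""
    return struct.unpack("e", struct.pack("e", x))[0]


def quantize_int8(values):
    """Symmetric 8-bit quantization: return (integer codes, scale factor)."""
    peak = max(abs(v) for v in values)
    scale = peak / 127 if peak else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def predict(weights, features):
    """Toy linear classifier: index of the class with the highest score."""
    scores = [sum(w * f for w, f in zip(row, features)) for row in weights]
    return scores.index(max(scores))


def accuracy(weights, inputs, labels):
    """Fraction of inputs whose predicted class matches the label."""
    hits = sum(predict(weights, x) == y for x, y in zip(inputs, labels))
    return hits / len(inputs)


rng = random.Random(0)  # fixed seed so the run is repeatable
full = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(4)]
inputs = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(500)]
# Assumption: the full-precision model's outputs stand in for ground truth.
labels = [predict(full, x) for x in inputs]

half = [[to_half(w) for w in row] for row in full]
int8 = [dequantize(*quantize_int8(row)) for row in full]

for name, weights in [("full", full), ("half", half), ("int8", int8)]:
    print(f"{name}: {accuracy(weights, inputs, labels):.3f}")
```

The full-precision row scores 1.000 by construction, since it supplies the labels. Any drop in the other two rows comes purely from the reduced-precision weights, which is the kind of cost a per-test accuracy figure is meant to surface alongside the speed gain.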

Frameworks, Datasets, and More

Geekbench AI 1.0 includes other significant changes that enhance its ability to measure real-world performance based on how applications are using AI. These include support for new frameworks, from OpenVINO on Linux and Windows to vendor-specific TensorFlow Lite delegates like Samsung ENN, ArmNN, and Qualcomm QNN on Android. This support better reflects the latest tools available to engineers and the changing ways that developers build their apps and services on the newest hardware.

This release also uses more extensive datasets that more closely reflect real-world inputs in AI use cases, and these bigger and more diverse datasets also increase the effectiveness of our new accuracy evaluations. All workloads in Geekbench AI 1.0 run for a minimum of a full second. This changes the impact of vendor- and manufacturer-specific performance tuning on scores, ensuring devices can hit their maximum performance levels during testing while still reflecting the bursty nature of real-world use cases.

More importantly, this also better accounts for the range of performance we see in real life; a five-year-old phone is going to be a LOT slower at AI workloads than, say, a 450W dedicated AI accelerator. Some devices can be so incredibly fast at some tasks that a too-short test counter-intuitively puts them at a disadvantage, underreporting their actual performance in many real-world workloads!
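The general idea of a minimum run time can be sketched in a few lines of Python. This is an illustration of the technique only, not Geekbench AI's implementation. The function name `run_for_at_least`, its parameters, and the sample workload are all hypothetical. Instead of timing a fixed number of iterations, the loop keeps repeating the workload until a minimum wall-clock duration has passed, so a very fast device performs many iterations rather than finishing before the measurement is meaningful.

```python
import time


def run_for_at_least(workload, min_seconds=1.0, clock=time.perf_counter):
    """Repeat `workload` until at least `min_seconds` have elapsed.

    Returns (iterations, elapsed_seconds, iterations_per_second).
    `clock` is injectable so the loop can be tested without real delays.
    """
    if min_seconds <= 0:
        raise ValueError("min_seconds must be positive")
    start = clock()
    iterations = 0
    while True:
        workload()
        iterations += 1
        elapsed = clock() - start
        if elapsed >= min_seconds:
            break
    return iterations, elapsed, iterations / elapsed


def sample_workload():
    """Stand-in for a single inference pass (hypothetical)."""
    sum(i * i for i in range(10_000))


iterations, elapsed, rate = run_for_at_least(sample_workload, min_seconds=0.2)
print(f"{iterations} iterations in {elapsed:.3f}s ({rate:.1f}/s)")
```

Reporting throughput over the whole window, rather than the time of a single pass, lets a slow phone and a fast accelerator be measured with the same loop: the slow device may complete only a handful of iterations while the fast one completes thousands.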

Additional Information

More detailed technical descriptions of our workloads and models are available in the Geekbench AI 1.0 Inference Workloads document. As always, all our tests are based on well-understood and widely used industry practices and standards for accurate testing methodology. And Geekbench AI is integrated with the Geekbench Browser for ease of cross-platform context and comparison. You will be able to compare top-performing devices on the Geekbench AI Benchmark Chart or check out the most recent results on the Latest Geekbench AI Results page.

With the 1.0 release, we think Geekbench AI has reached a level of reliability that allows developers to confidently integrate it into their workflows; many big names like Samsung and Nvidia are already using it. But AI is nothing if not fast-changing, so expect new releases and updates as needs and AI features in the market change. (And if you’re a developer with a hyper-specific AI or ML workflow need that isn’t currently addressed, please let us know! Drop by our Discord, shoot us an email, or tag us on social media so we can make Geekbench AI the best tool for you, specifically.)

After half a decade of engineering, we’re proud of the work we’ve accomplished with Geekbench AI. The pace of development in machine learning, deep learning, and AI right now is very fast, and our benchmark will continue to iterate and change in the coming years to reflect the ways developers and engineers build their products, and how consumers use them.

We invite you to download and use Geekbench AI 1.0 today on Android, iOS, and Desktop.